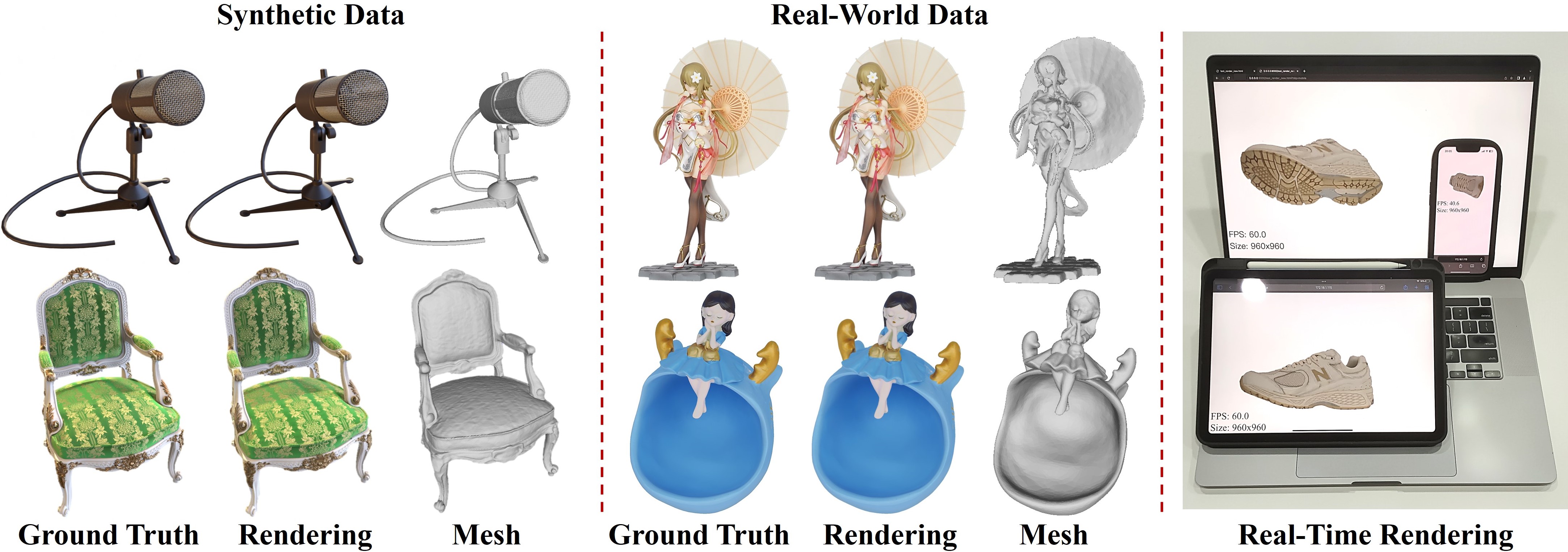

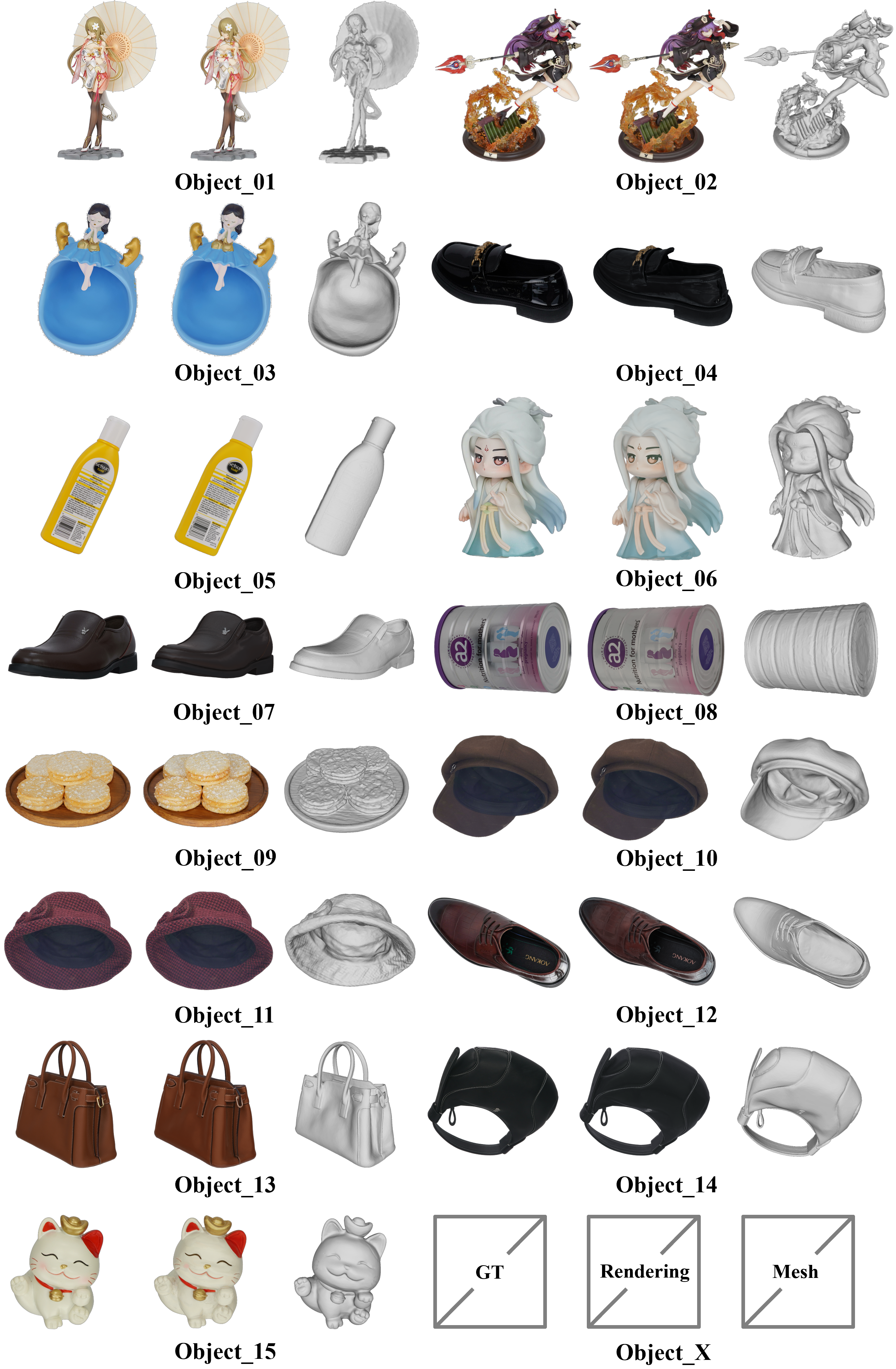

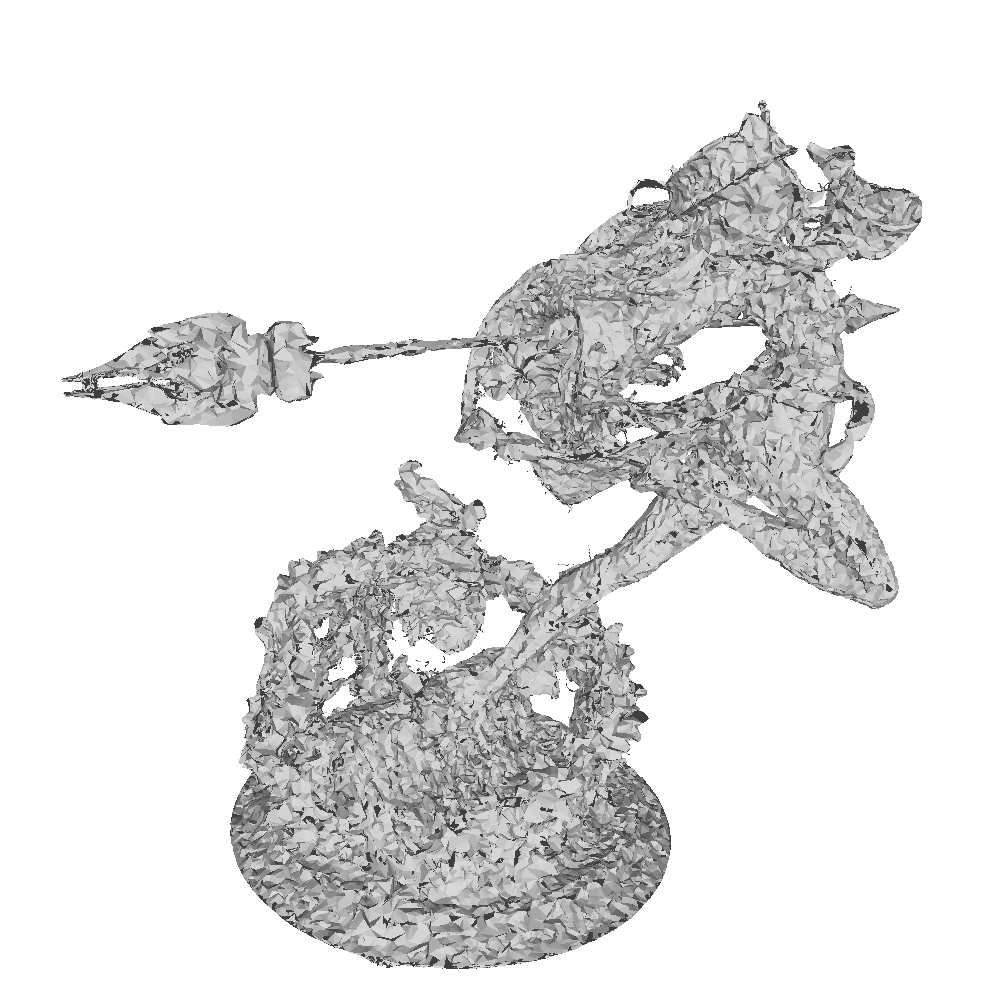

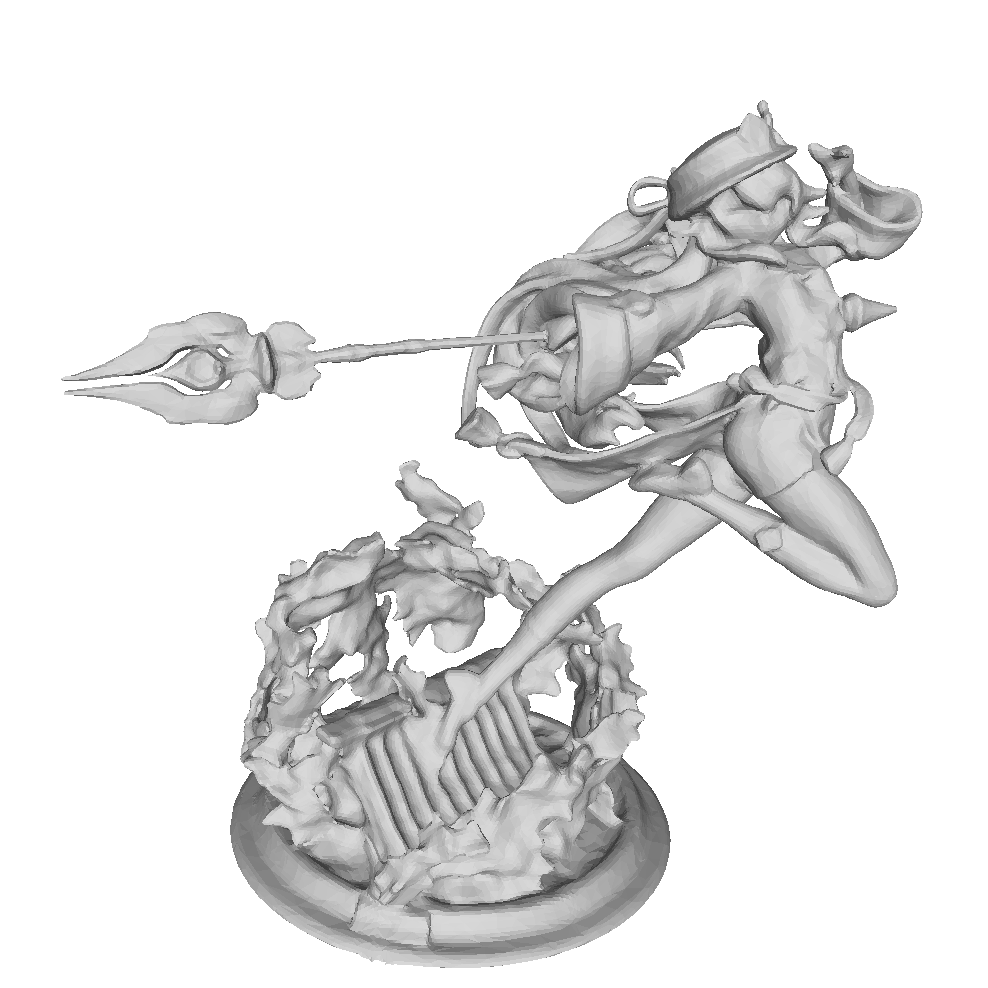

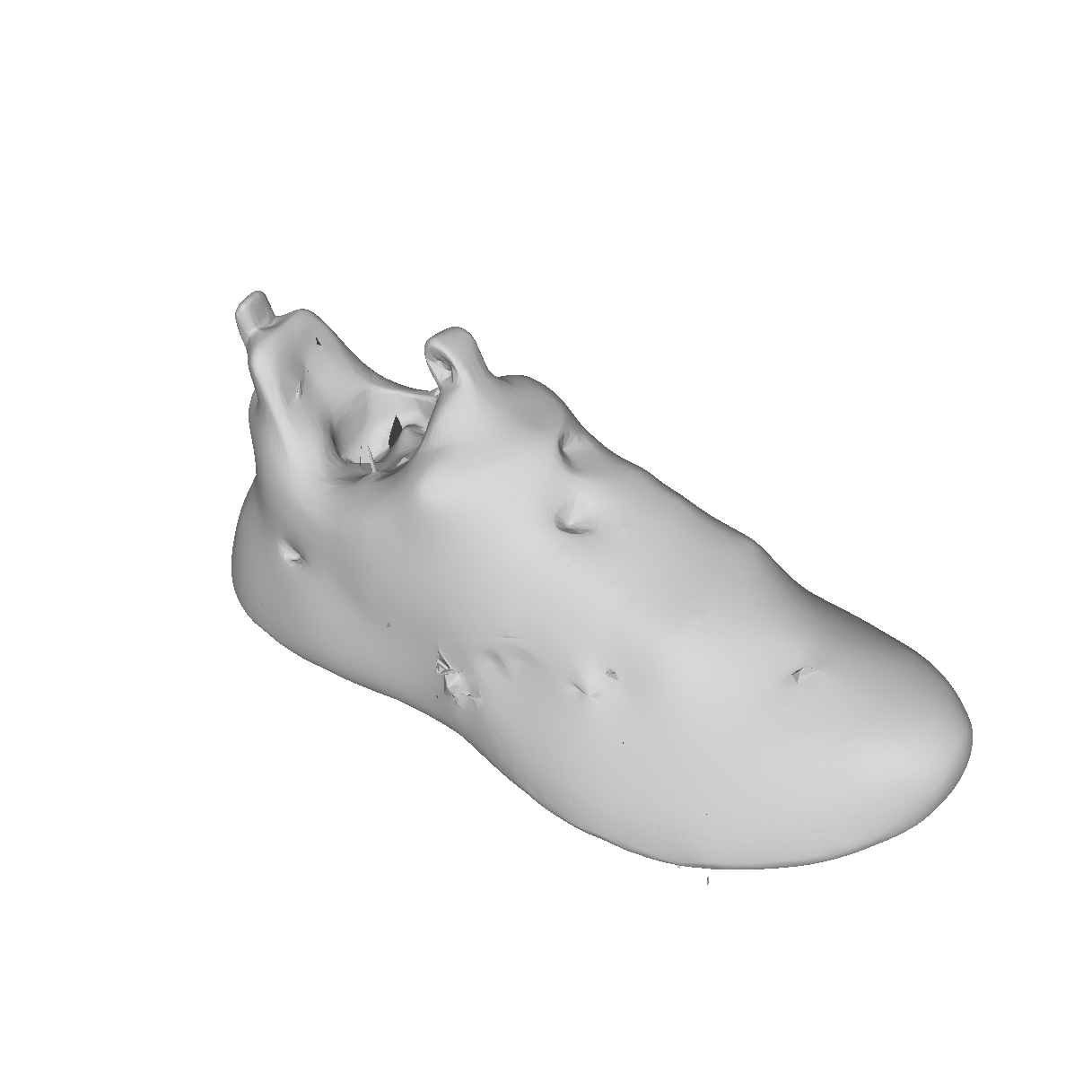

EvaSurf reconstructs high-quality appearance and accurate meshes for both synthetic and real-world objects, and renders them in real time on various devices.

Reconstructing real-world 3D objects has numerous applications in computer vision, such as virtual reality, video games, and animation. Ideally, 3D reconstruction methods should generate high-fidelity, 3D-consistent results in real time. Traditional methods match pixels between images using photo-consistency constraints or learned features, while differentiable rendering methods such as Neural Radiance Fields (NeRF) use differentiable volume rendering or surface-based representations to generate high-fidelity scenes. However, these methods require excessive rendering time, making them impractical for everyday applications. To address these challenges, we present EvaSurf, an Efficient View-Aware implicit textured Surface reconstruction method. We first employ an efficient surface-based model with a multi-view supervision module to ensure accurate mesh reconstruction. To enable high-fidelity rendering, we learn an implicit texture embedded with a view-aware encoding that captures view-dependent information. Furthermore, combining the explicit geometry with the implicit texture lets us use a lightweight neural shader, which reduces computational cost and supports real-time rendering on common mobile devices. Extensive experiments demonstrate that our method reconstructs high-quality appearance and accurate meshes on both synthetic and real-world datasets. Moreover, our method trains in just 1-2 hours on a single GPU and runs on mobile devices at over 40 FPS (frames per second), with the final package required for rendering taking up only 40-50 MB.
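To illustrate the general idea of pairing an implicit texture with a view-aware encoding and a small neural shader, the sketch below decodes a per-pixel texture feature plus an encoded view direction into RGB with a tiny two-layer MLP. This is a hypothetical illustration, not the EvaSurf architecture: the 8-dimensional feature size, the sin/cos frequency encoding of the view direction, the layer widths, and the names `positional_encoding` and `TinyShader` are all assumptions, and the weights are random rather than trained.

```python
import numpy as np

def positional_encoding(d, num_freqs=4):
    """Encode unit view directions d of shape (N, 3) with sin/cos at octave frequencies."""
    freqs = 2.0 ** np.arange(num_freqs)                 # (F,)
    scaled = d[:, None, :] * freqs[None, :, None]       # (N, F, 3)
    enc = np.concatenate([np.sin(scaled), np.cos(scaled)], axis=-1)  # (N, F, 6)
    return np.concatenate([d, enc.reshape(d.shape[0], -1)], axis=-1)  # (N, 3 + 6F)

class TinyShader:
    """Hypothetical shader: (texture feature, encoded view direction) -> RGB."""

    def __init__(self, feat_dim=8, num_freqs=4, hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = feat_dim + 3 + 6 * num_freqs
        self.num_freqs = num_freqs
        # Random (untrained) weights, for illustration only.
        self.w1 = rng.normal(0.0, 0.1, (in_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.1, (hidden, 3))
        self.b2 = np.zeros(3)

    def __call__(self, features, view_dirs):
        # Normalize view directions before encoding them.
        view_dirs = view_dirs / np.linalg.norm(view_dirs, axis=-1, keepdims=True)
        x = np.concatenate([features, positional_encoding(view_dirs, self.num_freqs)], axis=-1)
        h = np.maximum(x @ self.w1 + self.b1, 0.0)                # ReLU hidden layer
        return 1.0 / (1.0 + np.exp(-(h @ self.w2 + self.b2)))      # sigmoid -> RGB in (0, 1)

# Stand-in inputs: one feature vector per pixel (as if sampled from the texture) and a view direction.
rng = np.random.default_rng(1)
features = rng.normal(size=(5, 8))
view_dirs = rng.normal(size=(5, 3))
rgb = TinyShader()(features, view_dirs)
```

Because the geometry is explicit, a shader of this kind only has to run once per visible pixel rather than at many samples along each ray, which is the intuition behind keeping it small enough for mobile hardware.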

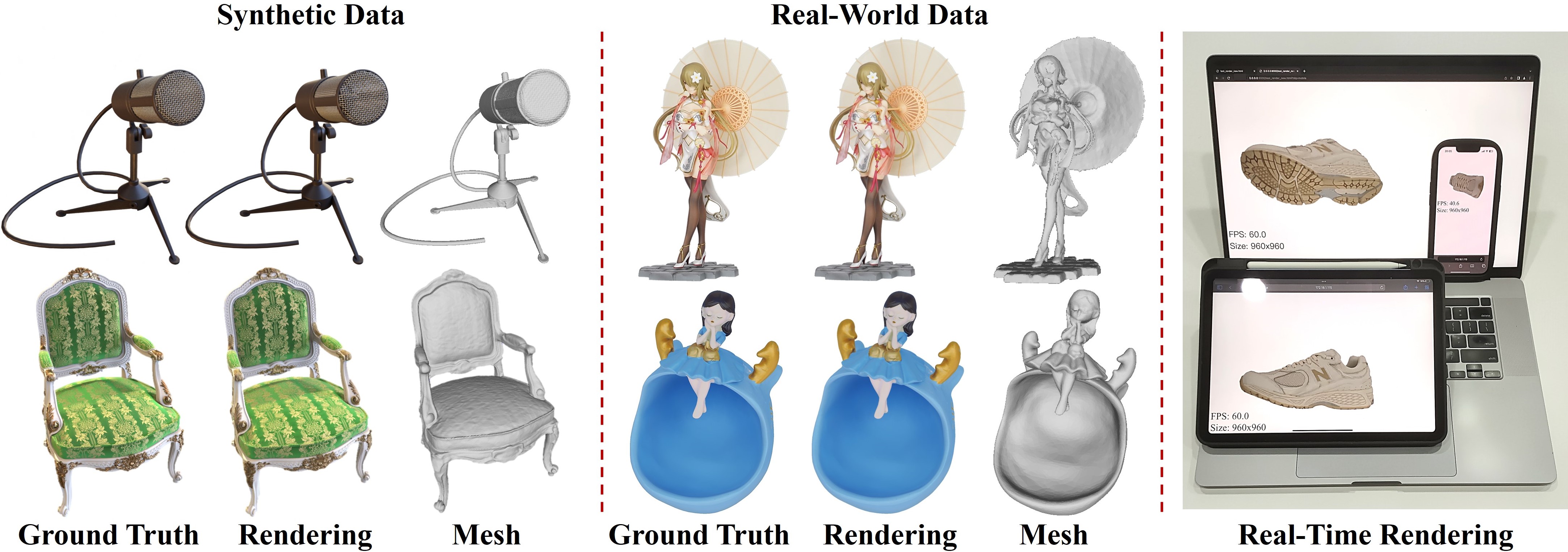

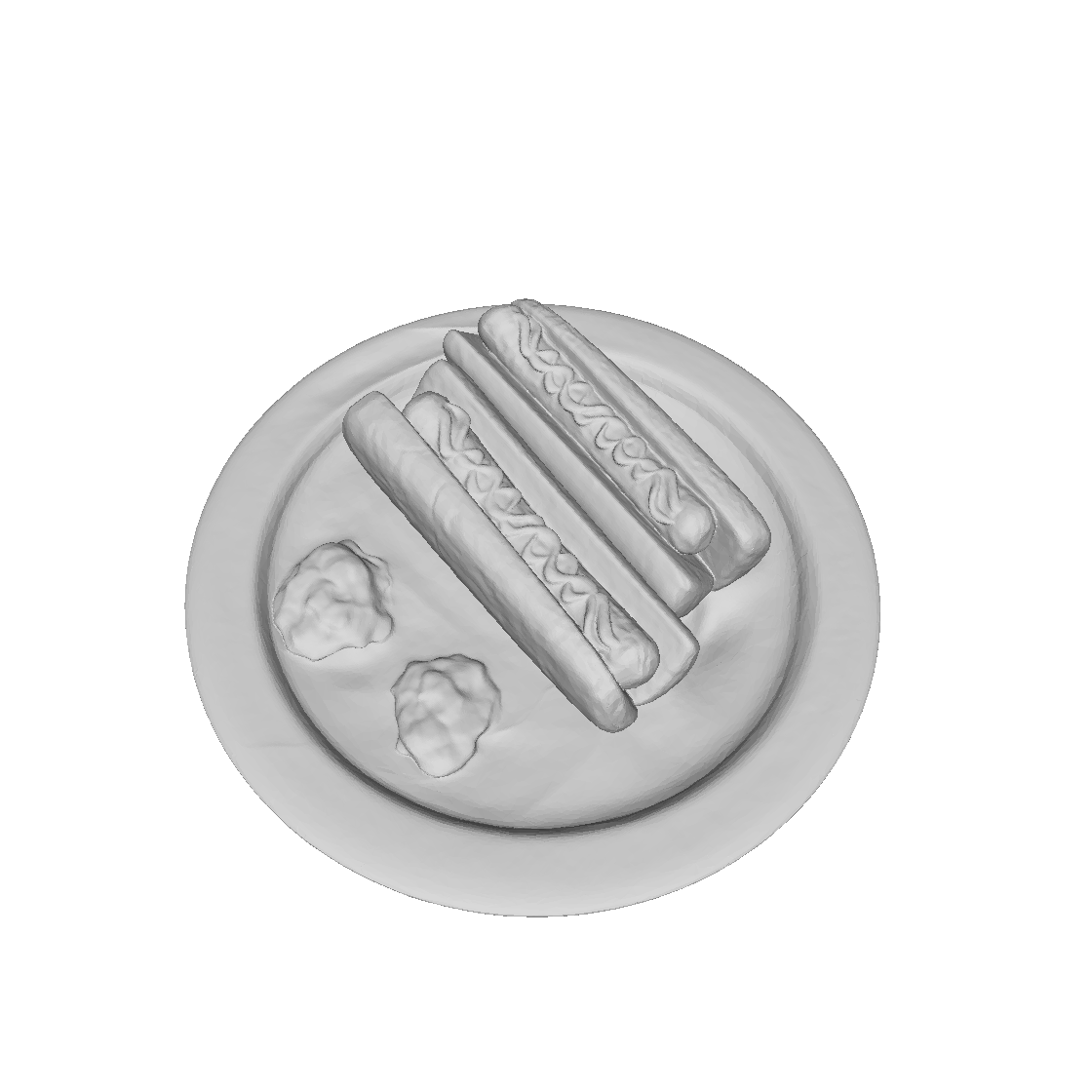

To demonstrate the effectiveness of our method, we also conduct experiments on a real-world high-resolution (2K) dataset. This dataset comprises 15 objects, each with over 200 images captured with accurate camera poses. For each object, we provide the camera poses in three conventions: COLMAP (sparse reconstruction), NeRF (`transforms.json`), and NeuS (`cameras_sphere.npz`). The corresponding image masks are also included.
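As a rough guide to the NeRF-style pose files, the sketch below parses a `transforms.json` string and derives the pinhole focal length and camera centers. It assumes the layout used by the original NeRF synthetic (Blender) data: a horizontal field of view `camera_angle_x` in radians and a list of `frames`, each with a `file_path` and a 4x4 camera-to-world `transform_matrix`. Whether the released files follow exactly this layout is an assumption, and the helper name `load_nerf_transforms`, the example file path, and the 2048-pixel image width are hypothetical.

```python
import json
import math

def load_nerf_transforms(text, image_width):
    """Parse a NeRF-style transforms.json string (assumed Blender-style layout)."""
    meta = json.loads(text)
    # Pinhole focal length in pixels from the horizontal field of view.
    focal = 0.5 * image_width / math.tan(0.5 * meta["camera_angle_x"])
    cameras = []
    for frame in meta["frames"]:
        m = frame["transform_matrix"]
        # The camera center is the translation column of the camera-to-world matrix.
        center = (m[0][3], m[1][3], m[2][3])
        cameras.append((frame["file_path"], center))
    return focal, cameras

# Hypothetical single-frame example with a 90-degree horizontal field of view.
example = json.dumps({
    "camera_angle_x": math.pi / 2,
    "frames": [{
        "file_path": "./images/0000",
        "transform_matrix": [[1, 0, 0, 0.5], [0, 1, 0, -1.0], [0, 0, 1, 4.0], [0, 0, 0, 1]],
    }],
})
focal, cams = load_nerf_transforms(example, image_width=2048)
```

With a 90-degree field of view, the focal length comes out to half the image width (about 1024 pixels here).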

The reconstructed results are shown below. The dataset will be released soon.

@article{gao2024evasurf,

title={EvaSurf: Efficient View-Aware Implicit Textured Surface Reconstruction},

author={Jingnan Gao and Zhuo Chen and Yichao Yan and Bowen Pan and Zhe Wang and Jiangjing Lyu and Xiaokang Yang},

journal={IEEE Transactions on Visualization and Computer Graphics},

year={2024}

}